AI Sargalay — LLM API Gateway

Full-stack LLM reselling platform for Myanmar developers. A Fastify 5 + Bun backend acts as a transparent proxy to 300+ AI models, with real-time usage-based billing, a balance-first proxy guard, and MMPay/MMQR integration for local Myanmar payments. It also includes a Next.js 15 portal with custom i18n (English + Burmese), a streaming AI assistant with a `search_models` tool and conversation summarization, and non-blocking model price caching.

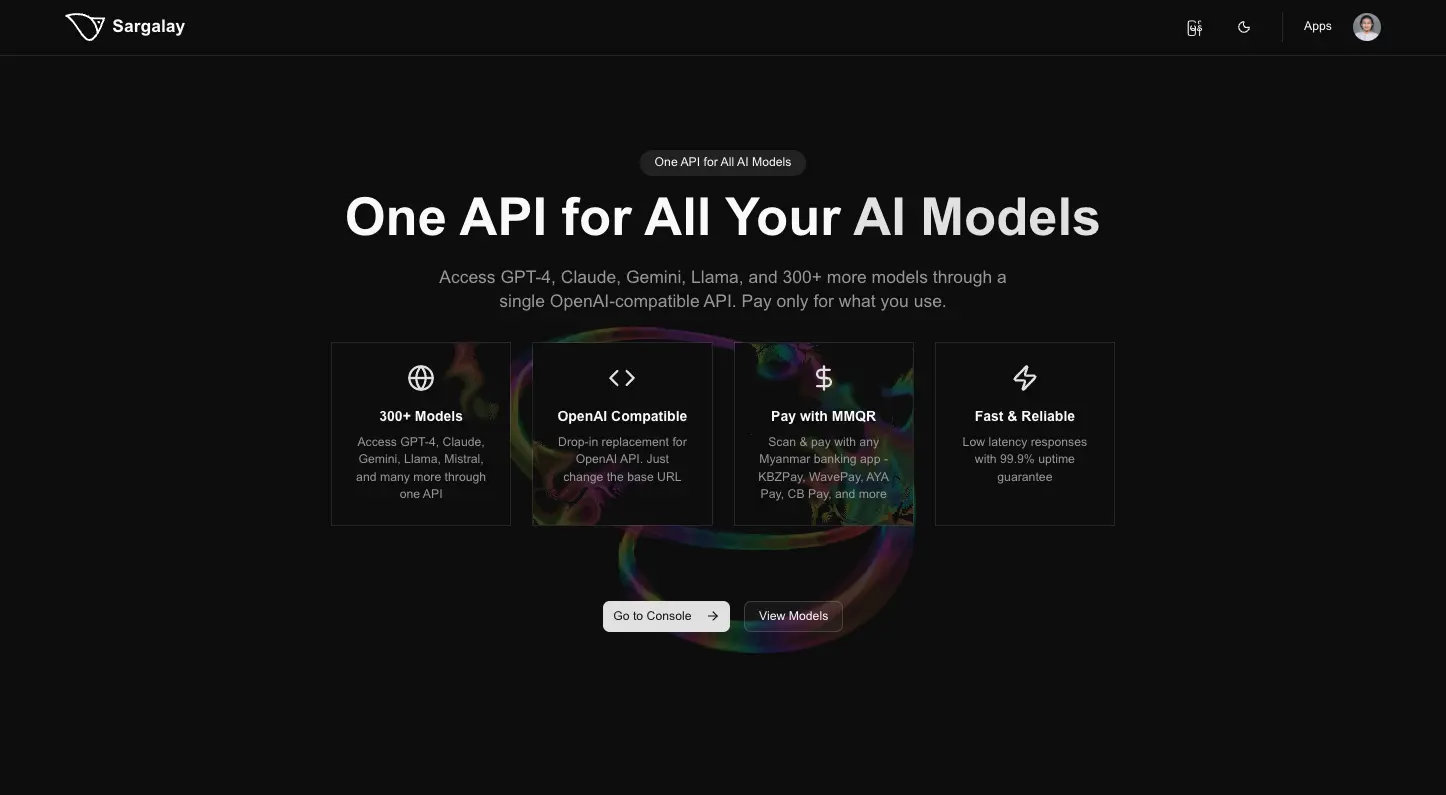

A full-stack LLM reselling platform built for Myanmar developers, providing OpenAI-compatible access to 300+ AI models (GPT-4, Claude, Gemini, Llama) with local payment integration.

Overview

AI Sargalay makes frontier AI models accessible to Myanmar developers through a unified OpenAI-compatible API with usage-based billing and local payment support. Developers get a single API endpoint and top up their balance using any Myanmar banking app.

Key Features

- 300+ Models — OpenAI-compatible API access to GPT-4, Claude, Gemini, Llama, and more

- Real-Time Billing — Actual token costs are captured from upstream response headers, marked up, and deducted from user balances immediately — no estimation error

- Balance-First Guard — Balance is validated before every proxied request, preventing users from consuming credits they can't pay for

- MMPay / MMQR Payments — Top up with KBZPay, WavePay, AYA Pay, or CB Pay — any Myanmar banking app

- Multilingual Portal — Custom i18n system (English + Burmese) built with React Context and JSON message files, no library dependency

- AI Assistant — Streaming chat assistant that knows the platform: available models, pricing, payment methods, and integration guides
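The billing and guard features above amount to two steps around each proxied call: reject the request before it is forwarded, then charge the actual upstream cost once the response arrives. The following is a minimal sketch, not the project's code. The in-memory `balancesMmk` map, the `x-upstream-cost-usd` header name, and the rate and markup values are all hypothetical placeholders.

```typescript
// Minimal sketch of a balance-first guard plus header-based billing.
// Hypothetical: the in-memory store, the header name, and the numbers below.

const USD_TO_MMK_RATE = 4500; // hypothetical exchange rate
const PRICE_MARKUP = 1.2; // hypothetical 20% markup
const COST_HEADER = "x-upstream-cost-usd"; // hypothetical header name

// Stand-in for the users' balance column, in MMK.
const balancesMmk = new Map<string, number>();

class InsufficientBalanceError extends Error {}

// Runs before the request is proxied: refuse if the balance is too low.
function assertBalance(userId: string, minimumMmk = 1): void {
  const balance = balancesMmk.get(userId) ?? 0;
  if (balance < minimumMmk) {
    throw new InsufficientBalanceError(
      `balance ${balance} MMK is below the ${minimumMmk} MMK minimum`,
    );
  }
}

// Runs after the upstream response: read the real cost, mark it up, deduct it.
function chargeFromHeaders(userId: string, headers: Headers): number {
  const costUsd = Number(headers.get(COST_HEADER) ?? "0");
  // Missing, malformed, or negative values charge nothing here;
  // a real system would likely log or flag that case.
  const safeCostUsd = Number.isFinite(costUsd) && costUsd > 0 ? costUsd : 0;
  const chargeMmk = safeCostUsd * USD_TO_MMK_RATE * PRICE_MARKUP;
  balancesMmk.set(userId, (balancesMmk.get(userId) ?? 0) - chargeMmk);
  return chargeMmk;
}
```

In a Fastify app, a check like `assertBalance` would fit naturally in a `preHandler` hook. A production version should also perform the deduction atomically in the database (for example, a conditional `UPDATE` inside a transaction) so that concurrent requests cannot overdraw a balance.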

Architecture

Monorepo — Separate Deployments

ai-gateway/
├── apps/
│   ├── api/ → Railway (Fastify 5, Bun runtime)
│   └── web/ → Vercel (Next.js 15)
└── packages/
    ├── @ai-gateway/types — shared Zod schemas (single source of truth)
    ├── @ai-gateway/db — Drizzle ORM schema + migrations
    └── @ai-gateway/config — shared TypeScript configs

Both apps share JWT_SECRET and ADMIN_KEY so JWTs issued by NextAuth are verifiable by Fastify without a round-trip. Packages are consumed as TypeScript source (no build step), so changes reflect instantly across apps during development.
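Verifying a shared-secret token locally needs only an HMAC check and an expiry check. The sketch below assumes the portal issues a standard HS256-signed JWT using `JWT_SECRET`. That is an assumption: NextAuth's default session token is encrypted (JWE) rather than signed, so a setup like this would need a custom encode step on the portal side. `signHs256` and `verifyHs256` are hypothetical helpers built on `node:crypto`, which Bun also provides.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Claims this sketch cares about; real tokens may carry more fields.
interface JwtClaims {
  sub?: string;
  exp?: number; // expiry, seconds since the Unix epoch
  [key: string]: unknown;
}

// HMAC-SHA256 over "header.payload", returned as raw bytes.
function hmacSha256(data: string, secret: string): Buffer {
  return createHmac("sha256", secret).update(data).digest();
}

// Hypothetical issuer side (the portal): produce a compact HS256 JWT.
function signHs256(claims: JwtClaims, secret: string): string {
  const header = Buffer.from(JSON.stringify({ alg: "HS256", typ: "JWT" })).toString("base64url");
  const payload = Buffer.from(JSON.stringify(claims)).toString("base64url");
  const signature = hmacSha256(`${header}.${payload}`, secret).toString("base64url");
  return `${header}.${payload}.${signature}`;
}

// Hypothetical verifier side (the API): no network call, only the shared secret.
function verifyHs256(
  token: string,
  secret: string,
  nowSec: number = Math.floor(Date.now() / 1000),
): JwtClaims | null {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, payload, signature] = parts;

  // Constant-time comparison of the expected and presented signatures.
  const expected = hmacSha256(`${header}.${payload}`, secret);
  const presented = Buffer.from(signature, "base64url");
  if (presented.length !== expected.length || !timingSafeEqual(presented, expected)) {
    return null;
  }

  // Only accept the algorithm this verifier implements.
  const decodedHeader = JSON.parse(Buffer.from(header, "base64url").toString("utf8"));
  if (decodedHeader.alg !== "HS256") return null;

  // Short-lived tokens: require an expiry and reject it once it has passed.
  const claims = JSON.parse(Buffer.from(payload, "base64url").toString("utf8")) as JwtClaims;
  if (typeof claims.exp !== "number" || claims.exp <= nowSec) return null;
  return claims;
}
```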

Price Caching

Model pricing is fetched from upstream and cached in PostgreSQL with a 1-hour TTL:

- Check DB — if models are less than 1 hour old, return them immediately

- If stale, return the cached models and fetch fresh ones in the background (non-blocking)

- Diff with `modelsAreDifferent()` — only write to DB if pricing or model IDs actually changed

- Pricing is transformed on read: `costUSD × USD_TO_MMK_RATE × PRICE_MARKUP`, with safe-parsing guards to prevent NaN/negative values
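The refresh flow in these steps is essentially a stale-while-revalidate cache. What follows is a minimal in-memory sketch. The `ModelCache` class, the `ModelPrice` shape, and the injected `fetchUpstream` function are hypothetical; a plain object field stands in for the PostgreSQL row, and the body of `modelsAreDifferent()` is only a guess at what such a diff compares. One further assumption: the time of the last upstream check is tracked in memory, so an unchanged refresh neither rewrites the row nor triggers another fetch on the next request.

```typescript
// Hypothetical shape of one cached model row; prices kept as upstream strings.
interface ModelPrice {
  id: string;
  promptUsd: string;
  completionUsd: string;
}

const TTL_MS = 60 * 60 * 1000; // 1-hour freshness window

// Guess at the diff: any added/removed ID or changed price counts as a change.
function modelsAreDifferent(current: ModelPrice[], fresh: ModelPrice[]): boolean {
  if (current.length !== fresh.length) return true;
  const byId = new Map(current.map((m) => [m.id, m] as const));
  return fresh.some((m) => {
    const old = byId.get(m.id);
    return old === undefined || old.promptUsd !== m.promptUsd || old.completionUsd !== m.completionUsd;
  });
}

class ModelCache {
  private row: { models: ModelPrice[]; updatedAt: number } | null = null; // stands in for the DB row
  private checkedAt = 0; // last successful upstream check, kept in memory
  private refreshing: Promise<void> | null = null;
  private readonly fetchUpstream: () => Promise<ModelPrice[]>;
  private readonly now: () => number;
  writes = 0; // counts simulated DB writes

  constructor(fetchUpstream: () => Promise<ModelPrice[]>, now: () => number = Date.now) {
    this.fetchUpstream = fetchUpstream;
    this.now = now;
  }

  async getModels(): Promise<ModelPrice[]> {
    if (this.row === null) {
      // Cold start: nothing cached yet, so this one caller has to wait.
      await this.startRefresh();
      return this.snapshot();
    }
    if (this.now() - this.checkedAt >= TTL_MS) {
      // Stale: refresh in the background and answer from cache right away.
      void this.startRefresh();
    }
    return this.row.models;
  }

  private snapshot(): ModelPrice[] {
    return this.row === null ? [] : this.row.models;
  }

  // Deduplicates concurrent refreshes so only one upstream fetch runs at a time.
  private startRefresh(): Promise<void> {
    if (this.refreshing === null) {
      this.refreshing = this.refresh().finally(() => {
        this.refreshing = null;
      });
    }
    return this.refreshing;
  }

  private async refresh(): Promise<void> {
    try {
      const fresh = await this.fetchUpstream();
      this.checkedAt = this.now();
      if (this.row === null || modelsAreDifferent(this.row.models, fresh)) {
        this.row = { models: fresh, updatedAt: this.now() }; // simulated DB write
        this.writes++;
      }
    } catch {
      // Upstream failure: keep serving the previous snapshot.
    }
  }
}
```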

The API never blocks on an upstream fetch for cached data, and the database is only written when something changes.
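The on-read price transformation from the caching steps, with its safe-parsing guards, might look like the sketch below. Only the formula and the `USD_TO_MMK_RATE` / `PRICE_MARKUP` names come from the description; the numeric values, the `parseNonNegative` helper, and the choice to return `null` rather than a zero price for unusable input are assumptions. The rate and markup are typed as `unknown` so that raw environment-variable strings can be passed through the same guard.

```typescript
// Hypothetical values; only the names USD_TO_MMK_RATE and PRICE_MARKUP come from the text.
const USD_TO_MMK_RATE = 4500;
const PRICE_MARKUP = 1.2;

// Accepts finite, non-negative numbers or numeric strings; anything else is null.
function parseNonNegative(value: unknown): number | null {
  let n: number;
  if (typeof value === "number") {
    n = value;
  } else if (typeof value === "string" && value.trim() !== "") {
    n = Number(value); // stricter than parseFloat: "12abc" becomes NaN
  } else {
    return null;
  }
  return Number.isFinite(n) && n >= 0 ? n : null;
}

// costUSD × USD_TO_MMK_RATE × PRICE_MARKUP, or null if any input is unusable.
function toMmkPrice(
  costUsd: unknown,
  rate: unknown = USD_TO_MMK_RATE,
  markup: unknown = PRICE_MARKUP,
): number | null {
  const cost = parseNonNegative(costUsd);
  const r = parseNonNegative(rate);
  const m = parseNonNegative(markup);
  if (cost === null || r === null || m === null) return null;
  return cost * r * m;
}
```

Returning `null` instead of `0` keeps a malformed upstream price from surfacing as a free model; the caller can hide such models instead.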

AI Assistant

Streaming chat assistant built with Vercel AI SDK + Google Gemini 2.0 Flash:

- `search_models` tool — queries the live model catalog filtered by modality, architecture, or price, returning the top 5 matches

- Conversation summarization — older messages are compressed into a rolling summary every 8 turns, keeping token usage low without losing context

- Multilingual — responds in Burmese or English based on the user's language preference

- Safety limits — max 10 messages, 500-char content cap, 2 tool-call steps per turn
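The `search_models` tool, the request limits, and the summarization cadence can be expressed as plain functions, independent of the AI SDK wiring. In this sketch the `CatalogModel` fields, the cheapest-first ordering, and the helper names are assumptions; only the numbers (top 5 results, 10 messages, 500 characters, every 8 turns) come from the list above. The 2-step tool-call cap is left out because it belongs to the model-call configuration rather than to these helpers.

```typescript
// Hypothetical catalog entry; the real catalog fields may differ.
interface CatalogModel {
  id: string;
  modality: string; // illustrative values such as "text" or "image"
  architecture: string; // illustrative
  promptPriceMmk: number;
}

interface SearchFilters {
  modality?: string;
  architecture?: string;
  maxPromptPriceMmk?: number;
}

interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

const SEARCH_LIMIT = 5; // top 5 matches
const MAX_MESSAGES = 10;
const MAX_CONTENT_CHARS = 500;
const SUMMARIZE_EVERY_TURNS = 8;

// Filters the catalog, then returns the cheapest matches first (ordering is an assumption).
function searchModels(catalog: CatalogModel[], filters: SearchFilters): CatalogModel[] {
  return catalog
    .filter(
      (m) =>
        (filters.modality === undefined || m.modality === filters.modality) &&
        (filters.architecture === undefined || m.architecture === filters.architecture) &&
        (filters.maxPromptPriceMmk === undefined || m.promptPriceMmk <= filters.maxPromptPriceMmk),
    )
    .sort((a, b) => a.promptPriceMmk - b.promptPriceMmk) // sorts the filtered copy, not the catalog
    .slice(0, SEARCH_LIMIT);
}

// Returns an error message for requests that exceed the safety limits, else null.
function checkLimits(messages: ChatMessage[]): string | null {
  if (messages.length > MAX_MESSAGES) return `too many messages (max ${MAX_MESSAGES})`;
  if (messages.some((m) => m.content.length > MAX_CONTENT_CHARS)) {
    return `message too long (max ${MAX_CONTENT_CHARS} characters)`;
  }
  return null;
}

// True on every 8th completed turn, when older messages get folded into the summary.
function shouldSummarize(completedTurns: number): boolean {
  return completedTurns > 0 && completedTurns % SUMMARIZE_EVERY_TURNS === 0;
}
```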

Key Technical Decisions

- Proxy billing over estimation — costs are read from actual upstream response headers, eliminating estimation drift

- Shared Zod schemas — single source of truth for API validation, OpenAPI docs, and TypeScript types across frontend and backend

- Non-blocking price sync — model cache updates run async so API latency is unaffected by upstream fetches

- Separate deployments from one repo — Vercel and Railway each build only their respective app using scoped build commands

Tech Stack

Backend: Fastify 5, Bun, TypeScript, Drizzle ORM, PostgreSQL, Zod

Frontend: Next.js 15, React Query, shadcn/ui, Tailwind CSS, Vercel AI SDK

Auth: GitHub OAuth (NextAuth) → short-lived JWT verified by Fastify

Payments: MMPay / MMQR (KBZPay, WavePay, AYA Pay, CB Pay)

Role

Solo Full-Stack Developer — end-to-end design and implementation of backend API, billing engine, portal, payment integration, AI assistant, and monorepo tooling.